Improving Visual Speech Enhancement Network by Learning Audio-visual Affinity with Multi-head Attention

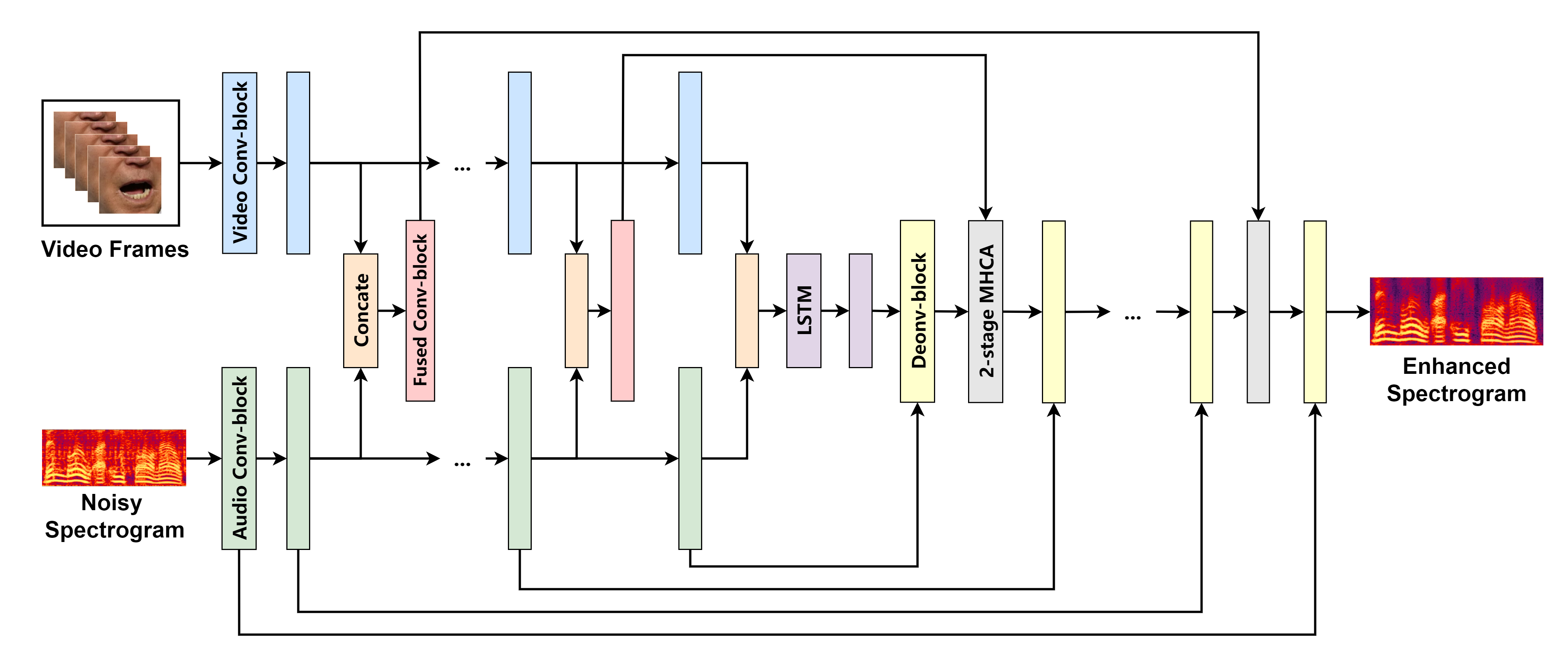

Audio-visual speech enhancement is regarded as one of the promising solutions for isolating and enhancing the speech of a desired speaker. Conventional methods focus on predicting the clean speech spectrum with a naive convolutional neural network based encoder-decoder architecture. These methods a) do not make full use of the data and b) cannot effectively balance audio and visual features. The proposed model addresses these drawbacks by a) fusing audio and visual features layer by layer in the encoding phase and feeding the fused audio-visual features to each corresponding decoder layer, and, more importantly, b) introducing a 2-stage multi-head cross attention (MHCA) mechanism that balances the fused audio-visual features and eliminates irrelevant ones. This paper proposes an attentional audio-visual multi-layer feature fusion model in which MHCA units are applied to the feature maps at every decoder layer. The proposed model demonstrates superior performance against state-of-the-art models.
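To make the cross-attention idea concrete, here is a minimal NumPy sketch of a generic multi-head cross attention step in which audio frames act as queries and visual frames act as keys and values. It is an illustration only and not the paper's 2-stage MHCA unit: the query/key/value assignment, the function name `multi_head_cross_attention`, the randomly initialised projection matrices `Wq`, `Wk`, `Wv`, `Wo`, and all dimensions are assumptions made for this example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_cross_attention(audio, visual, params, num_heads):
    """Audio frames (queries) attend over visual frames (keys/values).

    audio:  (T_a, d_model) audio features
    visual: (T_v, d_model) visual features
    params: dict of (d_model, d_model) matrices "Wq", "Wk", "Wv", "Wo"
            (hypothetical names, randomly initialised below)
    Returns fused features (T_a, d_model) and weights (num_heads, T_a, T_v).
    """
    t_a, d_model = audio.shape
    t_v = visual.shape[0]
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(x, t):
        # (t, d_model) -> (num_heads, t, d_head)
        return x.reshape(t, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(audio @ params["Wq"], t_a)
    k = split_heads(visual @ params["Wk"], t_v)
    v = split_heads(visual @ params["Wv"], t_v)

    # Scaled dot-product scores between every audio and visual frame.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (H, T_a, T_v)
    weights = softmax(scores, axis=-1)  # each audio frame's weights sum to 1
    context = weights @ v  # (H, T_a, d_head)

    # Concatenate the heads again and apply the output projection.
    merged = context.transpose(1, 0, 2).reshape(t_a, d_model)
    return merged @ params["Wo"], weights


# Toy inputs: 10 audio frames, 5 video frames, 16-dimensional features.
rng = np.random.default_rng(0)
d_model, num_heads = 16, 4
params = {
    name: rng.standard_normal((d_model, d_model)) * 0.1
    for name in ("Wq", "Wk", "Wv", "Wo")
}
audio = rng.standard_normal((10, d_model))
visual = rng.standard_normal((5, d_model))

fused, weights = multi_head_cross_attention(audio, visual, params, num_heads)
print(fused.shape, weights.shape)  # (10, 16) (4, 10, 5)
```

Because the softmax normalises over the visual axis, each audio frame distributes a total weight of 1 across the video frames, which is one way irrelevant visual features can be down-weighted during fusion.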

Dataset

- We used the TCD-TIMIT and GRID Corpus datasets.

Experiment Results

Male speech + ambient noise

| | Noisy | Enhanced-AVCRN | Enhanced-MHCA-AVCRN |
|---|---|---|---|
| Sample | | | |

Female speech + ambient noise

| | Noisy | Enhanced-AVCRN | Enhanced-MHCA-AVCRN |
|---|---|---|---|
| Sample | | | |

Female speech + unknown talker speech

| | Mixture | Enhanced-AVCRN | Enhanced-MHCA-AVCRN |
|---|---|---|---|
| Sample | | | |

Male speech + unknown talker speech

| | Mixture | Enhanced-AVCRN | Enhanced-MHCA-AVCRN |
|---|---|---|---|
| Sample | | | |

NOTE:

- To cite this paper, use: Xinmeng Xu, Yang Wang, Jie Jia, Binbin Chen, Dejun Li, "Improving Visual Speech Enhancement Network by Learning Audio-visual Affinity with Multi-head Attention"